Imaging System

Imaging Components

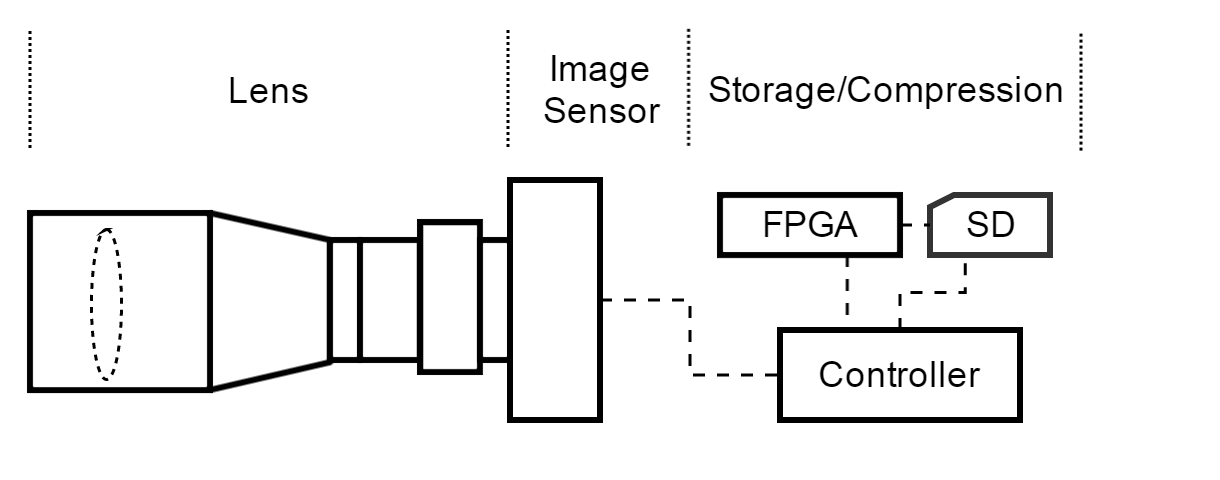

The imaging system has three main subsystems: the camera lens, the image sensor, and storage/compression. The camera lens collects light, as determined by its specifications, and focuses it onto the image sensor. The image sensor converts the incoming light into a digital image and passes it to the controller. The controller stores the raw image on the SD card until the FPGA compresses it.

Camera Lens

The lens that will be used on the cubesats is the Kowa LM100JC. It is rated for a 5.00° horizontal by 3.80° vertical angle of view on a 2/3" sensor; on the smaller 1/2.3" image sensor described below, the effective angle of view is about 3.71° by 2.47°. At an altitude of 450 km, this provides a ground field of view of about 0.262° of longitude (at the equator) by 0.1747° of latitude. Based on the specifications of the image sensor below, this corresponds to ground coverage of about 29.2 km horizontal by 19.4 km vertical, which equates to a ground instantaneous field of view (ground sample distance) of about 5.625 meters/pixel in both directions. The table below provides other key specifications of the camera lens.

| Feature | Specification |
|---|---|
| Sensor Format | 2/3" |
| Focal Length | 100.0 mm |
| f-number | 2.8 |
| Size | 42.0 x 70.0 mm |
| Mount | C-Mount |
| Weight | 150 g |
| Aperture Diameter (f/N) | 35.7 mm |
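
The 5.00° × 3.80° rating can be checked with the standard angle-of-view formula, θ = 2·atan(d / 2f), for a sensor dimension d and focal length f. The sketch below assumes the nominal 2/3" active area of 8.8 mm × 6.6 mm (not stated in this document) and also computes the entrance pupil diameter D = f/N implied by the focal length and f-number in the table.

```python
import math

FOCAL_LENGTH_MM = 100.0  # Kowa LM100JC focal length, from the table above
F_NUMBER = 2.8           # f-number, from the table above

# Assumption: nominal 2/3" format active area (not given in this document).
SENSOR_2_3_IN_MM = (8.8, 6.6)


def angle_of_view_deg(sensor_dim_mm: float, focal_length_mm: float) -> float:
    """Full angle of view of a rectilinear lens focused at infinity."""
    return math.degrees(2.0 * math.atan(sensor_dim_mm / (2.0 * focal_length_mm)))


def entrance_pupil_mm(focal_length_mm: float, f_number: float) -> float:
    """Entrance pupil (effective aperture) diameter, D = f / N."""
    return focal_length_mm / f_number


h_aov = angle_of_view_deg(SENSOR_2_3_IN_MM[0], FOCAL_LENGTH_MM)
v_aov = angle_of_view_deg(SENSOR_2_3_IN_MM[1], FOCAL_LENGTH_MM)
pupil = entrance_pupil_mm(FOCAL_LENGTH_MM, F_NUMBER)

print(f'2/3" angle of view: {h_aov:.2f} x {v_aov:.2f} deg')
print(f"entrance pupil at f/{F_NUMBER}: {pupil:.1f} mm")
```

Under that assumption the formula gives roughly 5.04° × 3.78°, consistent with the lens rating, and an entrance pupil of about 35.7 mm at f/2.8.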

Imaging Sensor

The AR1820HS, an 18 MP 1/2.3" CMOS image sensor from ON Semiconductor, will be used in the imaging system. This high-resolution sensor is designed to work well in low-light conditions. The table below provides its key specifications.

| Feature | Specification |
|---|---|
| Pixels - Horizontal | 5184 |
| Pixels - Vertical | 3456 |
| Pixel Size | 1.25 x 1.25 μm |
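
Combining the lens and sensor specifications, the ground coverage follows from similar triangles: a pixel of pitch p images a ground patch of p·H/f at altitude H (the ground sample distance). This sketch assumes nadir pointing and a flat-Earth approximation, which is reasonable for a frame a few tens of kilometres across; the Earth-central angles use an assumed equatorial radius of 6378.137 km.

```python
import math

FOCAL_LENGTH_M = 0.100       # 100.0 mm lens
PIXEL_PITCH_M = 1.25e-6      # 1.25 um square pixels
PIXELS_H, PIXELS_V = 5184, 3456
ALTITUDE_M = 450e3           # orbital altitude
R_EARTH_M = 6_378_137.0      # assumption: WGS-84 equatorial radius

# Ground sample distance: one pixel projected onto the ground (nadir, flat Earth)
gsd_m = PIXEL_PITCH_M * ALTITUDE_M / FOCAL_LENGTH_M

# Frame footprint on the ground
footprint_h_km = PIXELS_H * gsd_m / 1e3
footprint_v_km = PIXELS_V * gsd_m / 1e3

# Earth-central angle subtended by the footprint (arc length / radius)
central_h_deg = math.degrees(footprint_h_km * 1e3 / R_EARTH_M)
central_v_deg = math.degrees(footprint_v_km * 1e3 / R_EARTH_M)

# Effective angle of view of the lens on this sensor's active area
aov_h_deg = math.degrees(2 * math.atan(PIXELS_H * PIXEL_PITCH_M / (2 * FOCAL_LENGTH_M)))
aov_v_deg = math.degrees(2 * math.atan(PIXELS_V * PIXEL_PITCH_M / (2 * FOCAL_LENGTH_M)))

print(f"GSD: {gsd_m:.3f} m/pixel")
print(f"footprint: {footprint_h_km:.2f} x {footprint_v_km:.2f} km")
print(f"central angle: {central_h_deg:.4f} x {central_v_deg:.4f} deg")
print(f"effective angle of view: {aov_h_deg:.2f} x {aov_v_deg:.2f} deg")
```

This reproduces a ground sample distance of 5.625 m/pixel and a footprint of about 29.16 km × 19.44 km, subtending roughly 0.262° × 0.1746° at the Earth's centre.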

FPGA

The Xilinx Virtex-5QV will be used as the FPGA that interfaces with the main controller and handles image compression. This radiation-hardened, space-grade FPGA is commonly used in aerospace and defense applications, and it provides a large number of block RAMs and configurable logic blocks. The FPGA will be loaded with the intoPIX JPEG2000 IP core for FPGAs.

Storage Device

Ruggedized SD storage media from Delkin Devices will be used by the controller, most importantly to hold the image data. The Delkin CE16TFPHK is a 16 GB memory device capable of operating in extreme temperatures and harsh environmental conditions. The controller stores each raw image on the memory device; the FPGA then reads the raw data and generates a compressed image, which is also stored on the device until it can be downlinked to the Earth stations. The device's capacity allows at least two orbits' worth of compressed images to be held in case something prevents the satellite from downlinking the data on one of the passes.
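
A rough capacity check, using the per-orbit figures derived later in this section (2807 images of 214,990,848 bits each, and 17,620,649,903 compressed bits per orbit), shows how much margin a 16 GB card provides. The sketch assumes 16 GB means 16 × 10⁹ bytes and that the whole card is available for image data, ignoring file-system overhead and any other files the controller keeps.

```python
# Per-orbit figures taken from this document
RAW_IMAGE_BITS = 214_990_848             # one RAW-12 frame
IMAGES_PER_ORBIT = 2807
COMPRESSED_BITS_PER_ORBIT = 17_620_649_903

# Assumption: 16 GB = 16 * 10**9 bytes, all of it usable for image data
CARD_BITS = 16 * 10**9 * 8

raw_frame_mb = RAW_IMAGE_BITS / 8 / 1e6
raw_orbit_gb = RAW_IMAGE_BITS * IMAGES_PER_ORBIT / 8 / 1e9
orbits_compressed = CARD_BITS / COMPRESSED_BITS_PER_ORBIT

print(f"one raw frame: {raw_frame_mb:.1f} MB")
print(f"raw frames for a full orbit: {raw_orbit_gb:.1f} GB")
print(f"compressed orbits that fit on the card: {orbits_compressed:.2f}")
```

Under these assumptions the card holds a little over seven orbits of compressed imagery, comfortably above the two-orbit requirement, whereas a full orbit of raw frames (about 75 GB) would not fit. This suggests raw frames are best compressed and released as the orbit proceeds rather than accumulated.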

Operation

Image Capture

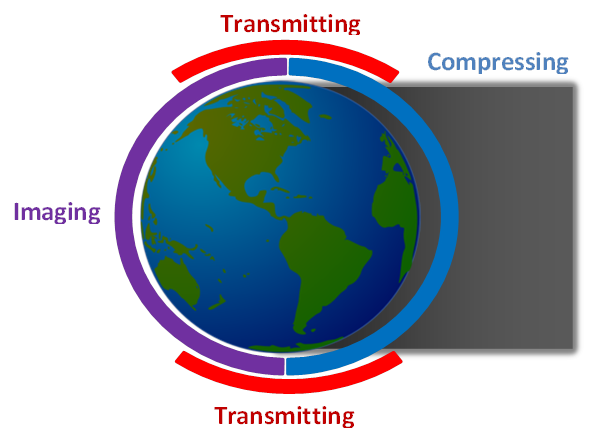

Based on the orbit of the cubesats and the specifications of the on-board camera equipment, the cubesats need to take a picture once every second. To reduce the required bandwidth and storage, however, images are captured only during the roughly half of each orbit that is in sunlight, as illustrated in Figure 2. With an orbital period of 93.587 minutes (5615 seconds), this yields approximately 2807 pictures per orbit.

Each image is 17,915,904 pixels (5184 × 3456) and is recorded as a RAW-12 image, meaning 12 bits are used per pixel. Thus, each uncompressed image is 214,990,848 bits (about 26.9 MB).
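
The period, image count, and frame size above can be recomputed for a circular orbit at 450 km using Kepler's third law, T = 2π√(a³/μ). The sketch assumes Earth's standard gravitational parameter μ = 398,600.4418 km³/s² and an equatorial radius of 6378.137 km (neither is stated in this document), one image per second over half the orbit, and a RAW-12 frame with no packing overhead.

```python
import math

MU_EARTH_KM3_S2 = 398_600.4418   # assumption: Earth's gravitational parameter
R_EARTH_KM = 6_378.137           # assumption: WGS-84 equatorial radius
ALTITUDE_KM = 450.0

# Circular-orbit period from Kepler's third law: T = 2*pi*sqrt(a^3 / mu)
a_km = R_EARTH_KM + ALTITUDE_KM
period_s = 2 * math.pi * math.sqrt(a_km**3 / MU_EARTH_KM3_S2)

# One image per second during the (approximately) sunlit half of the orbit
images_per_orbit = int(period_s / 2)

# RAW-12: 12 bits per pixel, no packing overhead assumed
pixels = 5184 * 3456
bits_per_image = pixels * 12

print(f"period: {period_s:.0f} s ({period_s / 60:.3f} min)")
print(f"images per orbit: {images_per_orbit}")
print(f"pixels per image: {pixels:,}; bits per image: {bits_per_image:,}")
```

It yields a period of about 5615 s (93.587 min), 2807 images per orbit, and 214,990,848 bits per frame, matching the figures in the text.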

Image Processing, Storage, and Compression

Given the large amount of data in each image, the data must be compressed for both storage and transmission. However, a uniformly low compression ratio with a wavelet codec such as JPEG2000 does not reduce the data enough, while a uniformly high ratio sacrifices resolution and, effectively, image quality. Thus a novel tiered compression scheme is implemented.

The tiered compression concept is based on the fact that most of the Earth's surface, about 71%, is covered by water [1], and significant portions of the land are non-urban and do not require as high fidelity. One study showed that less than 1% of the Earth can be considered urban, leaving approximately 28% that is non-urban land [2]. Thus three categories, each with its own compression ratio, are applied to the captured pictures, as provided in the table below. Although not every orbit will contain these same percentages of the three categories, they are expected to average out over many orbits. These percentages are therefore used to determine the capacity needed to downlink the data to the Earth stations.

| Category | Type | Percentage of Images | Compression Ratio |
|---|---|---|---|
| 1 | Urban | 1% | 10:1 |
| 2 | Land/non-urban | 28% | 20:1 |
| 3 | Water | 71% | 50:1 |
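
The per-orbit averages in the data-volume table later in this section follow directly from these percentages and ratios. The minimal sketch below assumes each category's image count is rounded to the nearest whole image; it also reports the effective rate after compression (12 bits per pixel divided by the ratio), an equivalent way to state a JPEG2000 rate target. Results may differ from the table by about one bit because of rounding.

```python
from dataclasses import dataclass

BITS_PER_IMAGE = 214_990_848   # 5184 x 3456 pixels x 12 bits
IMAGES_PER_ORBIT = 2807


@dataclass(frozen=True)
class Category:
    name: str
    fraction: float   # share of images per orbit
    ratio: int        # compression ratio N, meaning N:1


CATEGORIES = [
    Category("Urban", 0.01, 10),
    Category("Land/non-urban", 0.28, 20),
    Category("Water", 0.71, 50),
]


def orbit_budget(categories, images=IMAGES_PER_ORBIT, bits=BITS_PER_IMAGE):
    """Return (rows, total_compressed_bits) for an average orbit."""
    rows = []
    for c in categories:
        count = round(images * c.fraction)      # pictures in this category
        raw = count * bits                      # uncompressed bits
        compressed = raw / c.ratio              # bits after N:1 compression
        rows.append((c.name, count, raw, compressed, 12 / c.ratio))
    return rows, sum(r[3] for r in rows)


rows, total_bits = orbit_budget(CATEGORIES)
for name, count, raw, compressed, bpp in rows:
    print(f"{name:15s} {count:5d} images {raw:>16,} -> {compressed:>16,.0f} bits ({bpp:.2f} bpp)")
print(f"total compressed: {total_bits:,.0f} bits = {total_bits / 8 / 2**30:.2f} GiB")
```

The weighting makes clear why the scheme pays off: the 71% of images taken over water dominate the raw volume but, at 50:1, contribute about the same compressed volume as the far fewer land images at 20:1.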

So that the FPGA knows which compression ratio to use for each image, a category table is uplinked to the satellite every time it passes the North Pole uplink facility. The computer system at the uplink facility can predict where the satellite will be each time a picture is taken, allowing it to generate the category table. This category table is a simple text file containing a category and the number of consecutive images that fall into that category; each line indicates a transition to a different category. The table below gives the average amount of data per orbit that needs to be downlinked to Earth, which totals 17,620,649,903 bits, or roughly 2.2 GB (2.05 GiB).

| Category | Pictures per Orbit | Total Uncompressed Size per Orbit (bits) | Total Compressed Size per Orbit (bits) |
|---|---|---|---|
| 1 | 28 | 6,019,743,744 | 601,974,375 |
| 2 | 786 | 168,982,806,528 | 8,449,140,326 |
| 3 | 1993 | 428,476,760,064 | 8,569,535,202 |
| Total | 2807 | 603,479,310,336 | 17,620,649,903 |
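
This document specifies only that each line of the category table holds a category and the number of consecutive images in it. Assuming a whitespace-separated `<category> <count>` line syntax (a hypothetical format; the real file layout is not given), applying the table on board reduces to run-length decoding. The function names and the example table below are illustrative, not part of the design.

```python
from typing import Iterator

# Compression ratio N (for N:1) per image category, from the category table
RATIOS = {1: 10, 2: 20, 3: 50}


def parse_category_table(text: str) -> list[tuple[int, int]]:
    """Parse lines of the assumed form '<category> <count>' into runs."""
    runs: list[tuple[int, int]] = []
    for line_no, raw_line in enumerate(text.splitlines(), start=1):
        line = raw_line.strip()
        if not line:
            continue  # tolerate blank lines
        parts = line.split()
        if len(parts) != 2:
            raise ValueError(f"line {line_no}: expected '<category> <count>'")
        category, count = int(parts[0]), int(parts[1])
        if category not in RATIOS or count <= 0:
            raise ValueError(f"line {line_no}: invalid entry {line!r}")
        if runs and runs[-1][0] == category:
            # Each line is supposed to mark a change of category
            raise ValueError(f"line {line_no}: category {category} repeats")
        runs.append((category, count))
    return runs


def ratio_schedule(runs: list[tuple[int, int]]) -> Iterator[int]:
    """Yield the compression ratio for each successive image, in capture order."""
    for category, count in runs:
        for _ in range(count):
            yield RATIOS[category]


# Hypothetical table for one orbit (run lengths are illustrative only)
example = """\
3 1200
2 300
1 28
2 486
3 793
"""

runs = parse_category_table(example)
schedule = list(ratio_schedule(runs))
print(len(schedule), schedule[0], schedule[1200], schedule[1500])  # prints: 2807 50 20 10
```

Because the table is run-length encoded, it stays small even for a full orbit: a pass with only a few land/water transitions needs only a few lines rather than one entry per image.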

References

[1] Perlman, Howard. "How much water is there on, in, and above the Earth?" [Online]. United States Geological Survey, 2015.

[2] Potere, David, Annemarie Schneider, Shlomo Angel, and Daniel L. Civco. "Mapping urban areas on a global scale: which of the eight maps now available is more accurate?" International Journal of Remote Sensing, Vol. 30, No. 24, 20 December 2009.